TLDR: We applied reinforcement learning (RL) to GPT-5 to improve its kernel generation capability and published our findings on arXiv. The paper reviews why supervised fine-tuning falls short and why RL is a natural fit, and goes into considerable technical detail on dataset curation and environment setup. Correctness gains were impressive, speedup gains were modest, and building the right harness makes all the difference. Read the paper at the link below.

arXiv paper link: https://www.arxiv.org/abs/2602.11000

GPU kernels generated by large language models (LLMs) are all the rage these days. Not long ago, this capability was out of reach even for frontier labs. Today, however, models like GPT-5.x and agent harnesses like Claude Code are making high-performance computing development more accessible than ever. Someone with little to no programming experience in this domain can now write competent, performant GPU kernels without fully understanding what each line does.

And yet, we are still hungry for faster, better, and more accurate models in this domain. The need is compounded by new GPUs arriving on roughly 12-month cycles, each requiring kernels to be rewritten to take advantage of the latest hardware features. Even the best frontier models often struggle to write code for new hardware, because relatively little labeled data targeting that hardware has made it into their training corpora. In our recent work, Fine-Tuning GPT-5 for GPU Kernel Generation, we use reinforcement learning with verifiable rewards to improve model performance without relying on any labeled training data.
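Reinforcement learning with verifiable rewards swaps labeled examples for an automatic check: run the generated kernel, compare its output against a trusted reference, and score the result. The sketch below illustrates that idea in plain Python under loudly stated assumptions. It uses a hypothetical reward that is 0.0 for any crash or numerical mismatch and otherwise equals the speedup over a baseline. The names (`verifiable_reward`, `outputs_match`, the ReLU examples), the tolerances, and the reward shaping are illustrative only, not the reward described in the paper. Timings are passed in rather than measured on a GPU.

```python
import math
from typing import Callable, List, Sequence

# A "kernel" here is any function from an input vector to an output vector (hypothetical stand-in).
Kernel = Callable[[Sequence[float]], List[float]]


def outputs_match(got: Sequence[float], want: Sequence[float], rtol: float, atol: float) -> bool:
    """Element-wise closeness check, similar in spirit to an allclose test."""
    if len(got) != len(want):
        return False
    return all(math.isclose(g, w, rel_tol=rtol, abs_tol=atol) for g, w in zip(got, want))


def verifiable_reward(
    candidate: Kernel,
    reference: Kernel,
    test_inputs: Sequence[Sequence[float]],
    candidate_time_s: float,
    baseline_time_s: float,
    rtol: float = 1e-5,
    atol: float = 1e-8,
) -> float:
    """Hypothetical reward: 0.0 unless every test passes, then speedup over the baseline."""
    if candidate_time_s <= 0 or baseline_time_s <= 0:
        raise ValueError("timings must be positive")
    for x in test_inputs:
        try:
            got = candidate(x)
        except Exception:
            return 0.0  # a kernel that crashes earns nothing
        if not outputs_match(got, reference(x), rtol, atol):
            return 0.0  # a wrong answer earns nothing, no matter how fast
    # Correct on all inputs: reward equals speedup (assumed shaping, values < 1.0 mean slower).
    return baseline_time_s / candidate_time_s


# Usage with toy "kernels" computing ReLU.
def ref_relu(x: Sequence[float]) -> List[float]:
    return [max(0.0, v) for v in x]


def good_relu(x: Sequence[float]) -> List[float]:
    return [v if v > 0 else 0.0 for v in x]


def bad_relu(x: Sequence[float]) -> List[float]:
    return [abs(v) for v in x]  # wrong for negative inputs


inputs = [[-1.0, 0.5, 2.0], [3.0, -0.25]]
print(verifiable_reward(good_relu, ref_relu, inputs, candidate_time_s=1.0, baseline_time_s=2.0))  # 2.0
print(verifiable_reward(bad_relu, ref_relu, inputs, candidate_time_s=1.0, baseline_time_s=2.0))   # 0.0
```

Gating the speedup term on correctness keeps a policy from being rewarded for fast but wrong code. A real environment would also need to guard against reward hacking, for example kernels that special-case the test inputs.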

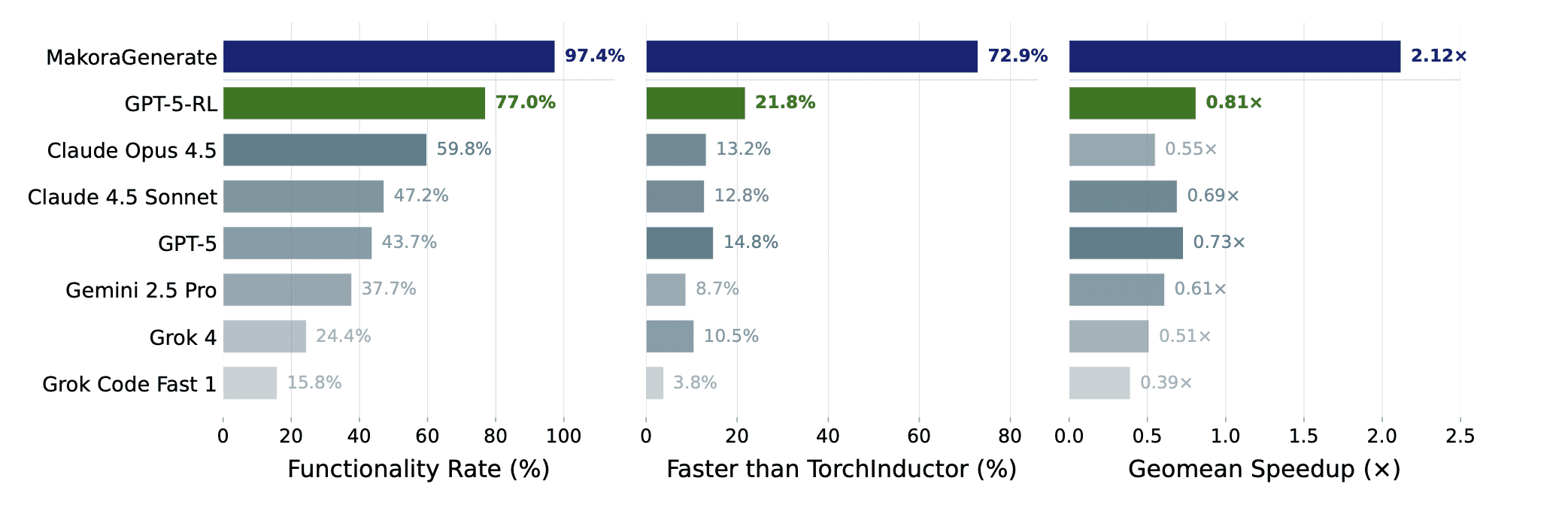

The results are promising. In a single-attempt setting, the GPT-5-RL model improves functional correctness from 43.7% to 77.0%. When integrated into our agent harness, MakoraGenerate, single-attempt correctness rises further to 97.4%. Performance improved as well: the geomean speedup over TorchInductor-generated kernels rose from 0.73x to 0.81x, and ultimately to 2.12x in MakoraGenerate.
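Because the figures above are geometric-mean speedups, a value below 1x (such as 0.73x) means the generated kernels are, on average, slower than TorchInductor's. A minimal sketch of that aggregation follows. It assumes per-kernel speedup is defined as baseline time divided by candidate time. The function name and timings are hypothetical, and the paper may define or weight the metric differently. Python's `statistics.geometric_mean` computes the same quantity; the explicit log form just makes the formula visible.

```python
import math
from typing import Sequence


def geomean_speedup(baseline_times: Sequence[float], candidate_times: Sequence[float]) -> float:
    """Geometric mean of per-kernel speedups, where speedup = baseline / candidate."""
    if not baseline_times or len(baseline_times) != len(candidate_times):
        raise ValueError("need one candidate timing per baseline timing")
    log_total = 0.0
    for base, cand in zip(baseline_times, candidate_times):
        if base <= 0 or cand <= 0:
            raise ValueError("timings must be positive")
        # Averaging in log space makes a 4x win and a 4x loss cancel out exactly.
        log_total += math.log(base / cand)
    return math.exp(log_total / len(baseline_times))


# One kernel 4x faster than the baseline, one 4x slower.
baseline = [4.0, 1.0]
candidate = [1.0, 4.0]
print(round(geomean_speedup(baseline, candidate), 6))  # prints 1.0
```

The arithmetic mean of the same two speedups (4.0 and 0.25) would be 2.125, which overstates the result. The geometric mean avoids letting a few large wins mask many regressions.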

This is a fast-moving field; it feels like a new paper appears in this space every two weeks. Stay tuned for more on which tricks worked, which didn’t, and what else we’re doing to improve kernel generation quality.
