Introducing MakoraOptimize

Automated hyperparameter optimization for vLLM and SGLang

Real-world gains

MakoraOptimize delivers production-grade inference performance improvements.

88% lower time-to-first-token

on Llama-70B on NVIDIA H100

Up to 61% higher throughput

on Llama-3.1-405B with 8× AMD MI300X

63% throughput boost

on Flux.1 Dev on a single AMD MI300X

How it works

Makora's hyperparameter optimization engine searches billions of possible inference engine configurations and automatically tunes for maximum performance.

1. Select model

2. Auto-tune vLLM/SGLang

3. Deploy anywhere
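To make the idea of searching a configuration space concrete, here is a minimal sketch of a random search. It is not Makora's engine or API. The setting names are borrowed from common vLLM-style options purely for illustration, the value lists are made up, and `synthetic_throughput` is a hypothetical stand-in for a real benchmark run.

```python
import random

# Hypothetical search space: names and values are illustrative assumptions,
# not the parameters MakoraOptimize actually tunes.
SEARCH_SPACE = {
    "max_num_seqs": [64, 128, 256, 512],
    "max_num_batched_tokens": [2048, 4096, 8192, 16384],
    "gpu_memory_utilization": [0.80, 0.85, 0.90, 0.95],
    "enable_chunked_prefill": [False, True],
}


def synthetic_throughput(config):
    """Stand-in for a real benchmark; returns a made-up tokens/s score."""
    score = 1000.0
    score += 2.0 * min(config["max_num_seqs"], 256)
    score += 0.05 * min(config["max_num_batched_tokens"], 8192)
    score += 500.0 * config["gpu_memory_utilization"]
    if config["enable_chunked_prefill"]:
        score += 100.0
    return score


def space_size(space):
    """Number of distinct configurations in the grid."""
    size = 1
    for values in space.values():
        size *= len(values)
    return size


def random_search(space, objective, trials, seed=0):
    """Sample configurations at random and keep the best-scoring one."""
    rng = random.Random(seed)
    best_config, best_score = None, float("-inf")
    for _ in range(trials):
        config = {name: rng.choice(values) for name, values in space.items()}
        score = objective(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


best_config, best_score = random_search(SEARCH_SPACE, synthetic_throughput, trials=50)
print(f"{space_size(SEARCH_SPACE)} possible configurations")
print(f"best of 50 samples: {best_config} -> {best_score:.1f} tokens/s (synthetic)")
```

Even this toy grid has 128 combinations from four settings. Real engines expose many more settings with wider ranges, which is why the space grows too large to test by hand and a sampling strategy is needed.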

It’s as easy as one line of code

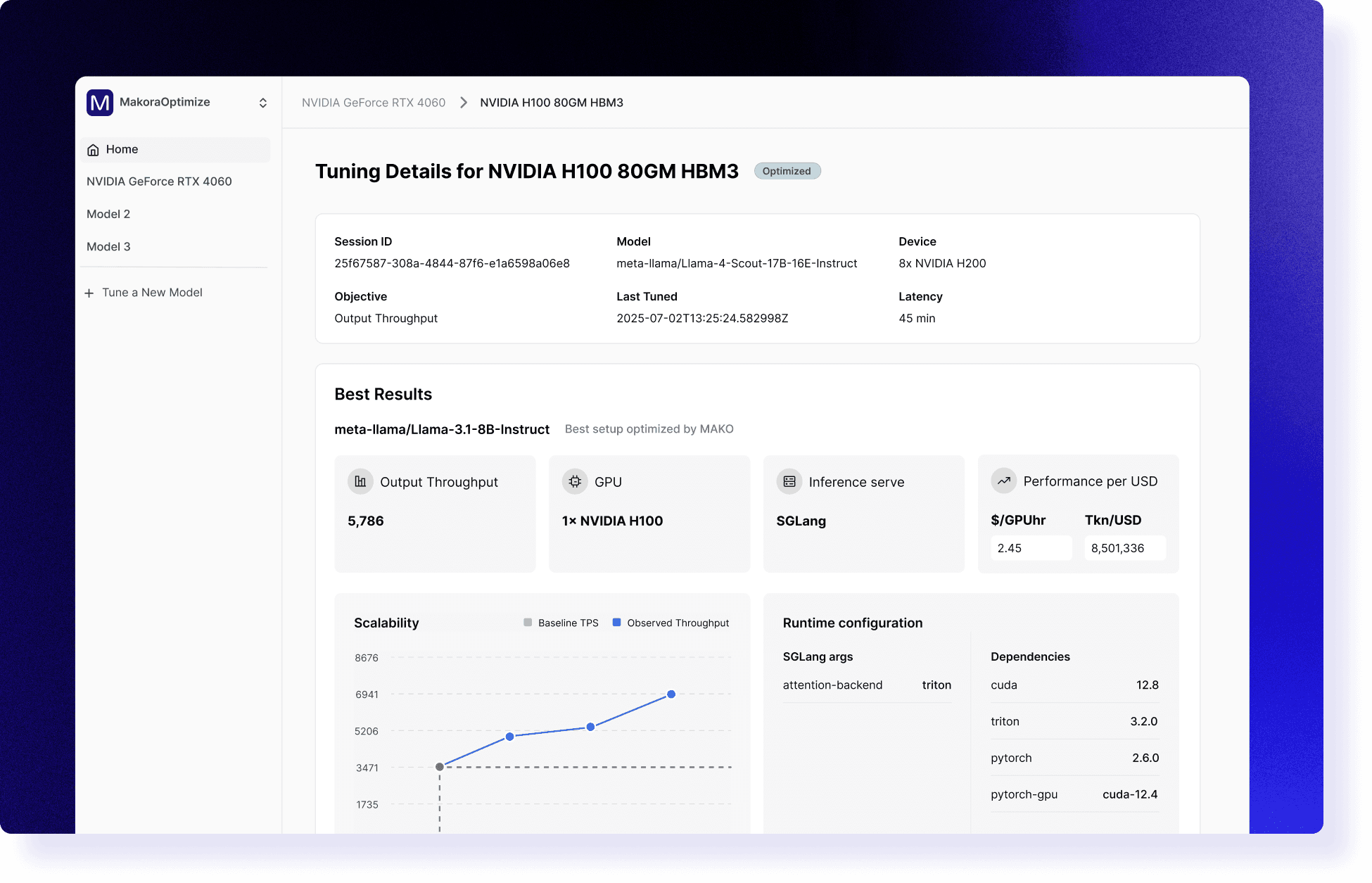

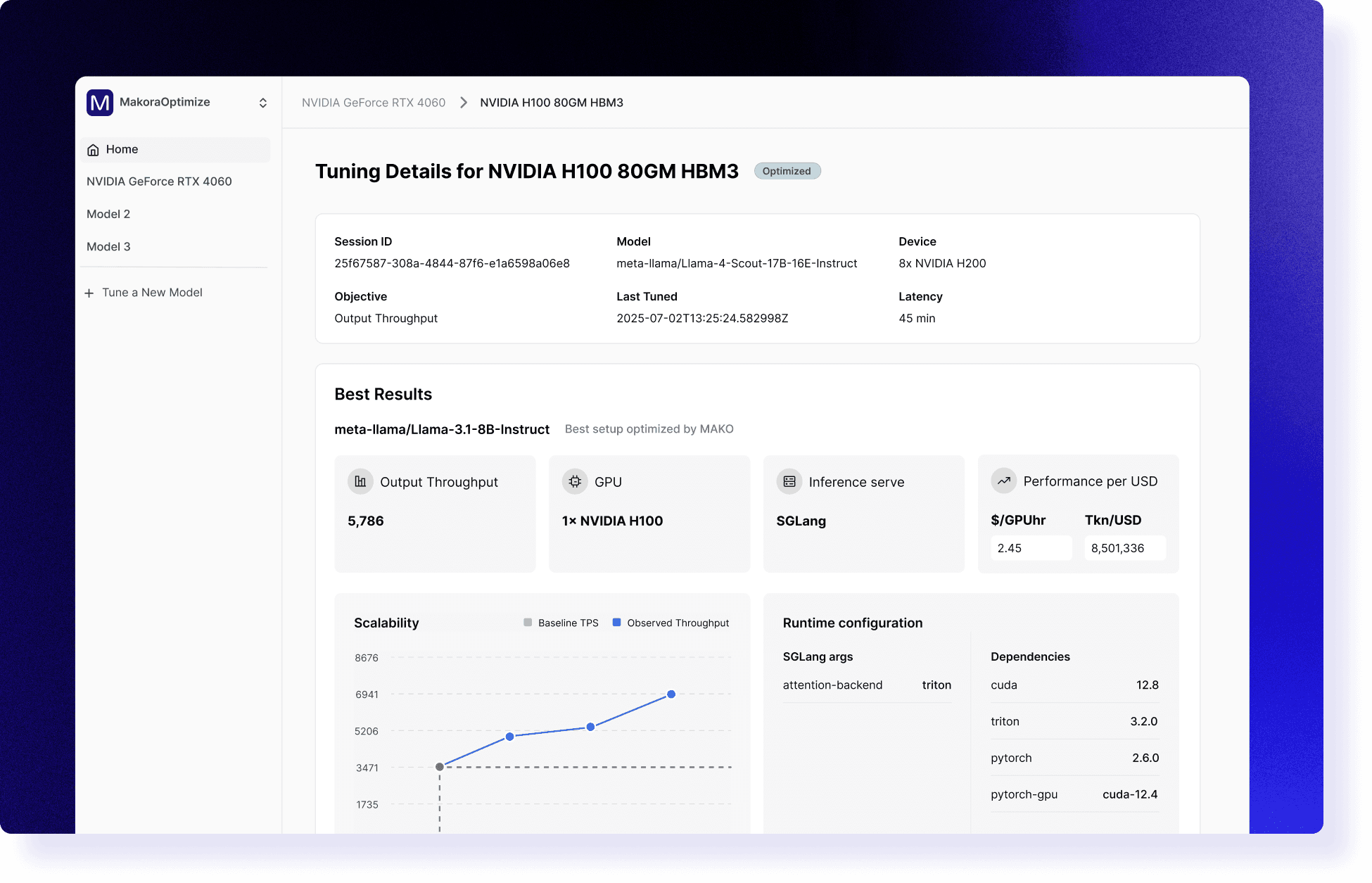

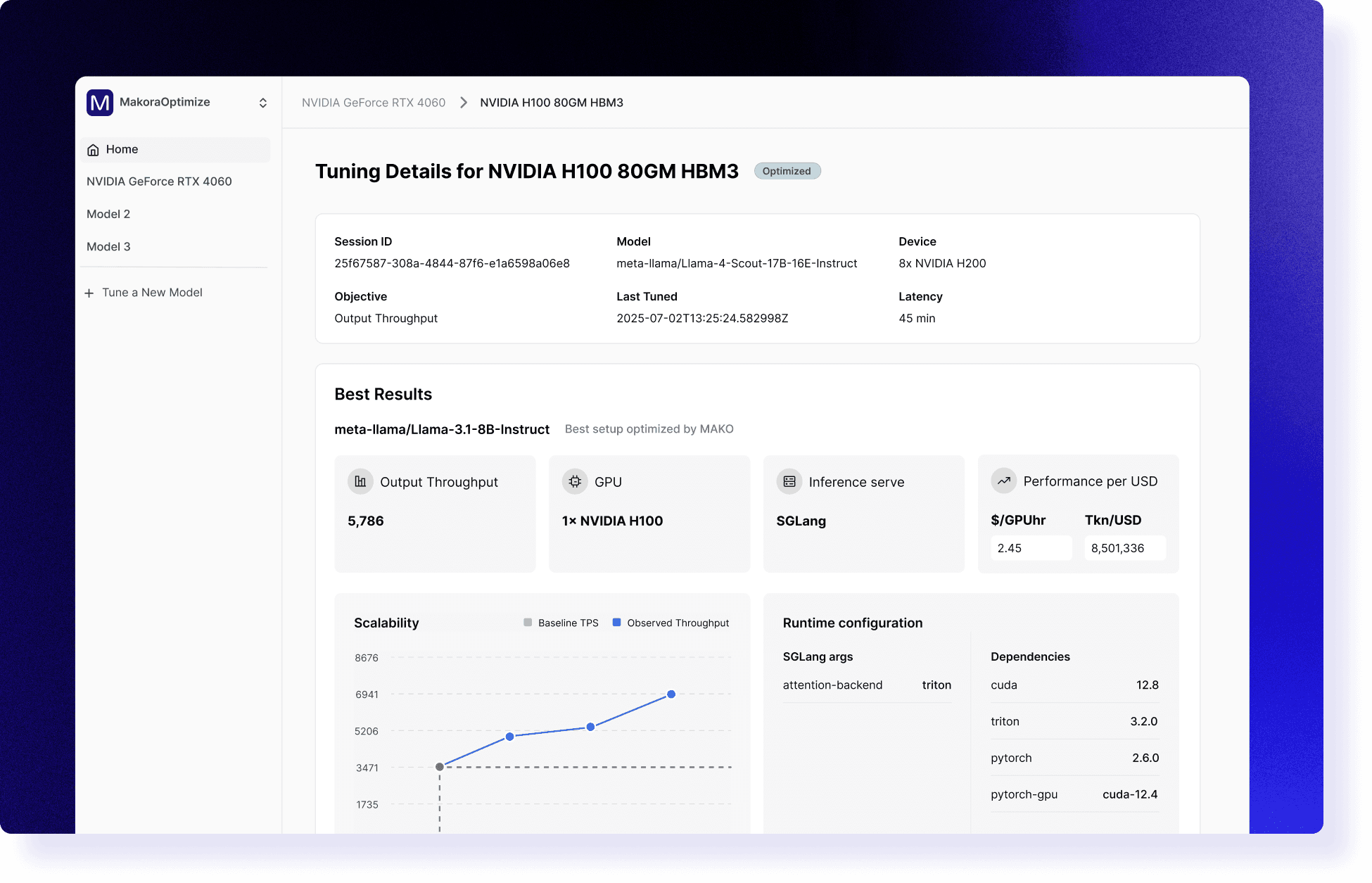

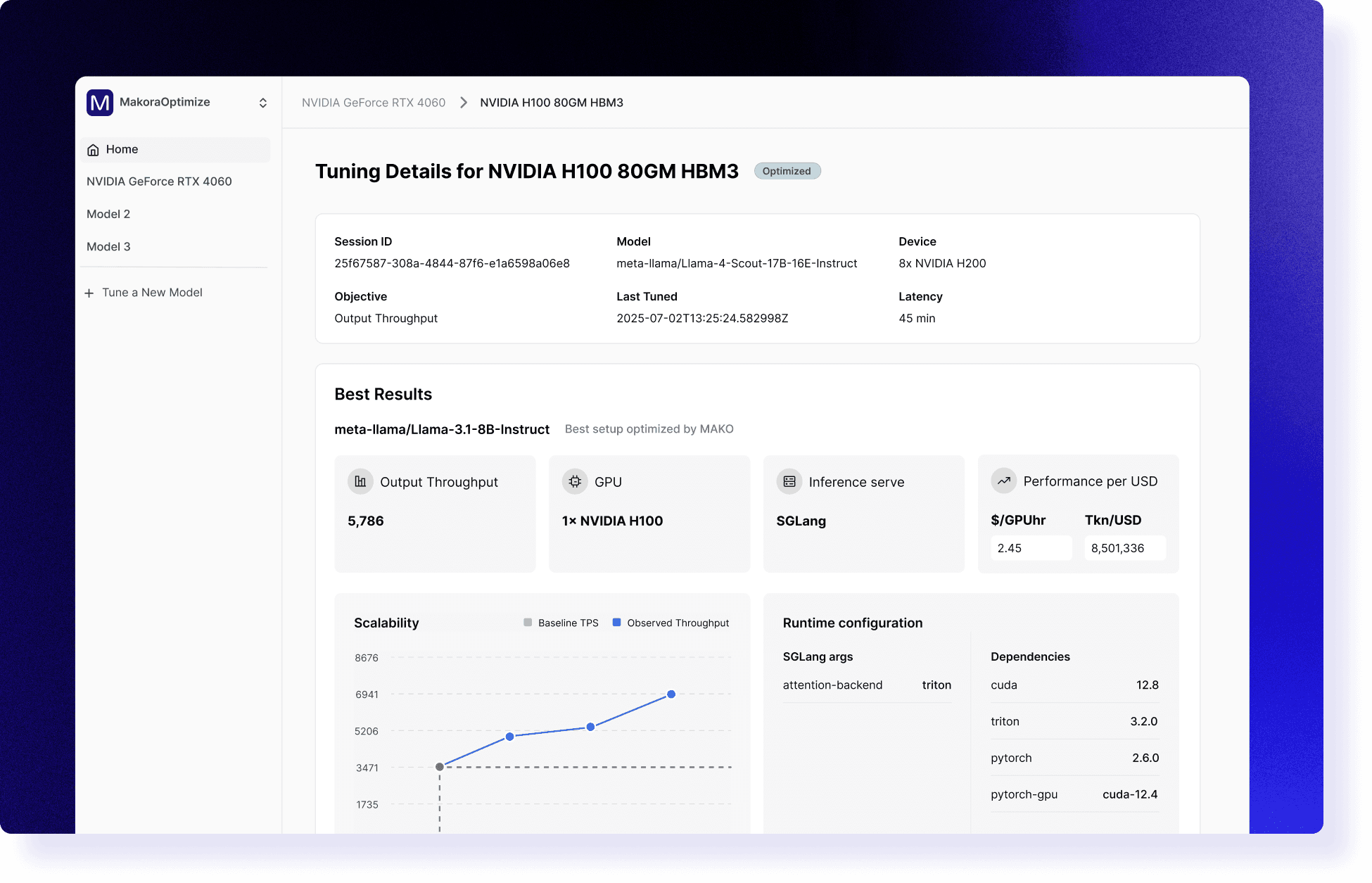

Monitor results in the MakoraOptimize dashboard

Core Features

Continuous, intelligent optimization

MakoraOptimize runs a 24/7 optimization loop across both the kernel and inference layers—constantly tuning for maximum throughput, lower latency, and better hardware utilization.

Hardware-agnostic and cloud-ready

Supports NVIDIA H100/H200, AMD MI300X, and major cloud platforms with zero vendor lock-in. No code rewrites or proprietary dependencies—run wherever your workloads live.

Built-in benchmarking and performance insights

Monitor real-time performance with precision metrics. Understand latency, throughput, and hardware efficiency at every step—with data you can act on.
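As a rough illustration of how metrics like these are typically derived, the sketch below computes time-to-first-token, aggregate throughput, and percent change from per-request timestamps. It does not reflect how the MakoraOptimize dashboard computes its numbers. The `RequestTiming` record and all sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RequestTiming:
    """Hypothetical per-request record; times are seconds on one clock."""
    sent_at: float
    first_token_at: float
    finished_at: float
    output_tokens: int


def mean_ttft(timings):
    """Average time-to-first-token across requests, in seconds."""
    return sum(t.first_token_at - t.sent_at for t in timings) / len(timings)


def throughput(timings):
    """Output tokens per second over the whole benchmark window."""
    start = min(t.sent_at for t in timings)
    end = max(t.finished_at for t in timings)
    return sum(t.output_tokens for t in timings) / (end - start)


def percent_change(baseline, tuned):
    """Signed change of tuned relative to baseline, in percent."""
    return (tuned - baseline) / baseline * 100.0


# Made-up sample data for two concurrent requests.
timings = [
    RequestTiming(sent_at=0.0, first_token_at=0.5, finished_at=2.0, output_tokens=100),
    RequestTiming(sent_at=0.0, first_token_at=0.25, finished_at=4.0, output_tokens=300),
]
print(f"mean TTFT: {mean_ttft(timings):.3f} s")
print(f"throughput: {throughput(timings):.1f} tokens/s")
```

With this convention, a lower time-to-first-token shows up as a negative percent change and a higher throughput as a positive one.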

Seamless plug-and-play integration

Drop into your existing stack without changing model architecture or inference engines. MakoraOptimize works with vLLM, SGLang, and more—right out of the box.

What our customers say

“Makora’s GPU kernel optimization capabilities and Microsoft Azure’s AI infrastructure makes it easier to scale AI workloads.”

Tom Davis

Partner, Microsoft for Startups Program

What kinds of applications benefit from Makora?

Large language models, transformer architectures, and high-throughput inference workloads see significant performance gains. Computer vision models, recommendation systems, and any GPU-bottlenecked application also benefit from automated kernel optimization.

Do I need to know CUDA to use Makora?

Not at all. MakoraOptimize handles all GPU programming complexity automatically. You can describe logic in Python-like syntax or natural language, and Makora handles the rest.

Can Makora be used in production today?

Yes. We're working with early adopters in production environments now. Join the waitlist to get early access and hands-on support.

Products

company

Copyright © 2025 Makora. All rights reserved.
